The Comforting Mirage of SEO A/B Testing

SEO A/B testing is limiting your search growth.

I know, that statement sounds backward and wrong. Shouldn’t A/B testing help SEO programs identify what does and doesn’t work? Shouldn’t SEO A/B testing allow sites to optimize based on statistical fact? You’d think so. But it often does the opposite.

That’s not to say that SEO A/B testing doesn’t work in some cases or can’t be used effectively. It can. But that’s rare. In my experience, SEO A/B testing is both applied and interpreted incorrectly, leading to stagnant, status quo optimization efforts.

SEO A/B Testing

The premise of SEO A/B testing is simple. Split a set of pages into two cohorts, apply your changes to the test group, leave the control group alone, and measure the difference between the two. It’s a simple champion/challenger test.

So where does it go wrong?

The Sum is Less Than The Parts

I’ve been privileged to work with some very savvy teams implementing SEO A/B testing. At first it seemed … amazing! The precision with which you could make decisions was unparalleled.

However, within a year I realized there was a very big disconnect between the SEO A/B tests and overall SEO growth. In essence, if you totaled up all of the SEO A/B testing gains that were rolled out, the sum was far greater than actual SEO growth.

just sayin’ pic.twitter.com/NgLkw1SPvD

— Luke Wroblewski (@LukeW) May 15, 2018

I’m not talking about the difference between 50% growth and 30% growth. I’m talking 250% growth versus 30% growth. Obviously something was not quite right. Some clients wave off this discrepancy. Growth is growth, right?

Yet, wasn’t the goal of many of these tests to measure exactly what SEO change was responsible for that growth? If that’s the case, how can we blithely dismiss the obvious fact that actual growth figures invalidate that central tenet?

Confounding Factors

So what is going on with the disconnect between SEO A/B tests and actual SEO growth? There are quite a few reasons why this might be the case.

Some are mathematical in nature such as the winner’s curse. Some are problems with test size and structure. More often I find that the test may not produce causative changes in the time period measured.
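To make the winner’s curse concrete, here is a minimal simulation sketch. Every number in it (a 1% true lift, 5% measurement noise, a 5% ship threshold) is an assumption picked for illustration, not data from any real program. The point is only that when you ship the tests that cleared a bar, the measured gains of those shipped tests overstate what they actually deliver.

```python
import random
import statistics

TRUE_LIFT = 0.01  # assumed: every change really is worth +1%


def simulate_winners_curse(n_tests=2000, true_lift=TRUE_LIFT, noise_sd=0.05,
                           ship_threshold=0.05, seed=42):
    """Simulate many tests that all share one small true lift.

    Each measured lift is the true lift plus Gaussian noise. Only tests whose
    measured lift clears the threshold get "shipped".
    Returns (mean measured lift of shipped tests, number of shipped tests).
    """
    rng = random.Random(seed)
    measured = [true_lift + rng.gauss(0, noise_sd) for _ in range(n_tests)]
    shipped = [m for m in measured if m >= ship_threshold]
    return statistics.mean(shipped), len(shipped)


mean_shipped, n_shipped = simulate_winners_curse()
print(f"true lift per test: {TRUE_LIFT:.1%}")
print(f"mean measured lift of {n_shipped} shipped tests: {mean_shipped:.1%}")
```

Add up the measured lifts of the shipped tests and you get a total far above what those changes are really worth, which is one plausible source of the gap between test gains and actual growth.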

A/A Testing

Many sophisticated SEO A/B testing solutions come with A/A testing. That’s good! But many internal testing frameworks don’t, which can lead to errors. While there are more robust explanations, A/A testing reveals whether your control group is valid by testing the control against itself.

If there is no difference between two cohorts of your control group then the A/B test gains confidence. But if there is a large difference between the two cohorts of your control group then the A/B test loses confidence.

More directly, if you had a 5% A/B test gain but your A/A test showed a 10% difference then you have very little confidence that you were seeing anything but random test results.

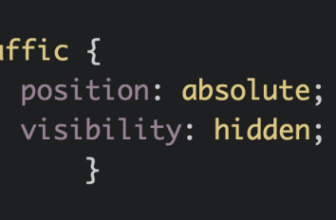

In short, your control group is borked.
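As a rough sketch of that comparison, the snippet below treats the A/A difference as a noise floor and refuses to trust an A/B lift that sits inside it. The click counts and the function names are hypothetical, and this is a crude sanity check rather than a proper significance test.

```python
def relative_difference(baseline_clicks, variant_clicks):
    """Relative change of variant versus baseline, e.g. 0.05 for +5%."""
    return (variant_clicks - baseline_clicks) / baseline_clicks


def ab_lift_is_credible(ab_lift, aa_difference):
    """Heuristic: an A/B lift no larger than the A/A gap looks like noise."""
    return abs(ab_lift) > abs(aa_difference)


# Hypothetical click totals over the same period.
aa_diff = relative_difference(10_000, 11_000)  # control half A vs. control half B
ab_lift = relative_difference(10_000, 10_500)  # control vs. test

print(f"A/A difference: {aa_diff:.0%}, A/B lift: {ab_lift:.0%}")
print("credible" if ab_lift_is_credible(ab_lift, aa_diff) else "likely noise")
```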

Lots of Bork

There are a number of other ways in which your cohorts can get borked. Google refuses to pass a referrer for image search traffic. So you don’t really know if you’re getting the correct sampling in each cohort. If the test group gets 20% of traffic from image search but the control group gets 35% then how would you interpret the results?

Some wave away this issue saying that you assume the same distribution of traffic in each cohort. I find it interesting how many slip from statistical precision to assumption so quickly.

Do you also know the percentage of pages in each cohort that are currently not indexed by Google? Maybe you’re doing that work but I find most are not. Again, the assumption is that those metrics are the same across cohorts. If one cohort has a materially different percentage of pages out of the index then you’re not making a fact-based decision.

Many of these potential errors can be reduced by increasing the sample size of the cohorts. That means very few can reliably run SEO A/B tests given the sample size requirements.
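Checking the index coverage assumption instead of hoping it holds doesn’t take much. The sketch below assumes you already have an indexed/not-indexed flag per page from whatever source you trust. The `Page` record, the sample URLs and the 2-point tolerance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    cohort: str    # "control" or "test"
    indexed: bool  # assumed to come from your own index-coverage check


def not_indexed_share(pages, cohort):
    """Fraction of pages in the cohort that are not indexed."""
    in_cohort = [p for p in pages if p.cohort == cohort]
    if not in_cohort:
        raise ValueError(f"no pages in cohort {cohort!r}")
    return sum(not p.indexed for p in in_cohort) / len(in_cohort)


def cohorts_balanced(pages, max_gap=0.02):
    """Return (balanced?, gap) for the not-indexed share across cohorts."""
    gap = abs(not_indexed_share(pages, "control") - not_indexed_share(pages, "test"))
    return gap <= max_gap, gap


# Hypothetical cohorts: 1 of 10 control pages and 3 of 10 test pages not indexed.
pages = (
    [Page(f"/c/{i}", "control", indexed=(i != 0)) for i in range(10)]
    + [Page(f"/t/{i}", "test", indexed=(i > 2)) for i in range(10)]
)
balanced, gap = cohorts_balanced(pages)
print(f"balanced: {balanced}, gap: {gap:.0%}")
```

The same shape of check works for any per-page attribute you can actually observe, such as whether a page’s main query shows a featured snippet.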

But Wait …

Maybe you’re starting to think about the other differences in each cohort. How many in each cohort have a featured snippet? What happens if the featured snippets change during the test? Do they change because of the test or are they a confounding factor?

Is the configuration of SERP features in each cohort the same? We know how radically different the click yield can be based on what features are present on a SERP. So how many Knowledge Panels are in each? How many have People Also Ask? How many have image carousels? Or video carousels? Or local packs?

Again, you have to hope that these are materially the same across each cohort and that they remain stable across those cohorts for the time the test is being run. I dunno, how many fingers and toes can you cross at one time?

Exposure

Sometimes you begin an SEO A/B test and you start seeing a difference on day one. Does that make sense?

It really shouldn’t. Because an SEO A/B test should only begin when you know that a material amount of both the test and control group have been crawled.

Google can’t have reacted to something that it hasn’t even “seen” yet. So more sophisticated SEO A/B frameworks will include a true start date by measuring when a material number of pages in the test have been crawled.
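One way to derive a true start date is sketched below. It assumes you can pull, from your server logs, the date Googlebot first crawled each page after the change went live. The 80% definition of “material”, the function names and the sample dates are assumptions for illustration.

```python
from datetime import date


def material_crawl_date(crawl_dates, total_pages, material_share=0.8):
    """Earliest date by which `material_share` of the cohort was crawled.

    crawl_dates: first post-launch crawl date for each page crawled so far.
    Returns None if the cohort has not reached the share yet.
    """
    for crawled_so_far, day in enumerate(sorted(crawl_dates), start=1):
        if crawled_so_far / total_pages >= material_share:
            return day
    return None


def true_start_date(test_dates, test_total, control_dates, control_total):
    """The test starts only once BOTH cohorts have been materially crawled."""
    starts = [
        material_crawl_date(test_dates, test_total),
        material_crawl_date(control_dates, control_total),
    ]
    return None if None in starts else max(starts)


# Hypothetical log data: 5 pages per cohort, one control page not yet crawled.
test_dates = [date(2021, 1, d) for d in (2, 3, 3, 5, 9)]
control_dates = [date(2021, 1, d) for d in (1, 2, 4, 6)]
print(true_start_date(test_dates, 5, control_dates, 5))
```

Anything measured before that date goes in the bin.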

Digestion

What can’t be known is when Google actually “digests” these changes. Sure, Google might crawl the page, but when does it actually take that version of the crawl and update the document as a result? If it identifies a change, do you know how long it takes to, say, reprocess the language vectors for that document?

That’s all a fancy way of saying that we have no real idea of how long it takes for Google to react to document level changes. Mind you, we have a much better idea when it comes to Title tags. We can see them change. And we can often see that when they change they do produce different rankings.

I don’t mind SEO A/B tests when it comes to Title tags. But it becomes harder to be sure when it comes to content changes and a fool’s errand when it comes to links.

The Ultimate SEO A/B Test

In many ways, true A/B SEO tests are core algorithm updates. I know it’s not a perfect analogy because it’s a pre versus post analysis. But I think it helps many clients to understand that SEO is not about any one thing but a combination of things.

More to the point, if you lose or win during a core algorithm update how do you match that up with your SEO A/B tests? If you lose 30% of your traffic during an update how do you interpret the SEO A/B “wins” you rolled out in the months prior to that update?

What we measure in SEO A/B tests may not be fully baked. We may be seeing half of the signals being processed or Google promoting the page to gather data before making a decision.

I get that the latter might be controversial. But it becomes hard to ignore when you repeatedly see changes produce ranking gains only to erode over the course of a few weeks or months.

Mindset Matters

The core problem with SEO A/B testing is actually not, despite all of the above, in the configuration of the tests. It’s in how we use the SEO A/B testing results.

Too often I find that sites slavishly follow the SEO A/B testing result. If the test produced a 1% decline in traffic that change never sees the light of day. If the result was neutral or even slightly positive it might not even be launched because it “wasn’t impactful”.

They see each test as being independent from all other potential changes and rely solely on the SEO A/B test measurement to validate success or failure.

When I run into this mindset I either fire that client or try to change the culture. The first thing I do is send them this piece on Hacker Noon about the difference between being data informed and data driven.

Because it is exhausting trying to convince people that the SEO A/B test that saw a 1% gain is worth pushing out to the rest of the site. And it’s nearly impossible in some environments to convince people that a -4% result should also go live.

In my experience SEO A/B test results that are between +/- 10% generally wind up being neutral. So if you have an experienced team optimizing a site you’re really using A/B testing as a way to identify big winners and big losers.
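Expressed as a rule of thumb, that looks something like the sketch below. The 10% band is the experience-based heuristic from the paragraph above, not a statistical threshold, and the function name is made up for illustration.

```python
def classify_result(lift, neutral_band=0.10):
    """Bucket a measured test lift using a +/- neutral band.

    lift: relative change, e.g. 0.01 for +1% or -0.04 for -4%.
    """
    if lift > neutral_band:
        return "big winner"
    if lift < -neutral_band:
        return "big loser"
    return "neutral"


for lift in (0.01, -0.04, 0.25, -0.30):
    print(f"{lift:+.0%}: {classify_result(lift)}")
```

Under this reading, the +1% and -4% results from earlier both land in the neutral bucket, so the decision to ship them falls back on expertise rather than the test.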

Don’t substitute SEO A/B testing results for SEO experience and expertise.

I get it. It’s often hard to gain the trust of clients or stakeholders when it comes to SEO. But SEO A/B testing shouldn’t be relied upon to convince people that your expert recommendations are valid.

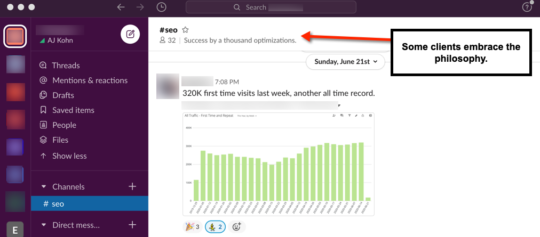

The Sum is Greater Than The Parts

Because the secret of SEO is the opposite of death by a thousand cuts. I’m willing to tell you this secret because you made it down this far. Congrats!

Clients often want to force rank SEO recommendations. How much lift will better alt text on images drive? I don’t know. Do I know it’ll help? Sure do! I can certainly tell you which recommendations I’d implement first. But in the end you need to implement all of them.

By obsessively measuring each individual SEO change and requiring it to obtain a material lift you miss out on greater SEO gains through the combination of efforts.

In a follow-up post I’ll explore different ways to measure SEO health and progress.

TL;DR

SEO A/B tests provide a comforting mirage of success. But issues with how SEO A/B tests are structured, what they truly measure and the mindset they usually create limit search growth.
